Article verified for Release 14.1 on March 29, 2025.

This article explains how to conduct item analysis in YouTestMe GetCertified. Item analysis lets you assess the reliability and effectiveness of test questions.

There are four ways to conduct item analysis:

- Predefined Test Report – You can utilize the item reliability report provided by YouTestMe GetCertified. This report offers insights into the performance of each test question, allowing you to assess the reliability and effectiveness of the items.

- Report Builder – The platform also offers a report builder feature that enables you to download all the answers to the questions. With this downloaded data, you can perform item analysis independently, gaining more flexibility and control over the analysis process.

- Statistics on Question Level – Within YouTestMe GetCertified, you have access to detailed statistics at the question level. This feature allows you to evaluate the performance, difficulty, and effectiveness of each question in a test.

- Statistics on Answer Level – You can also analyze statistics at the answer level to understand how candidates interact with each possible answer. This provides deeper insights into the quality and clarity of the answer choices, helping to refine test questions further.

Using predefined test reports

To access the Item Reliability report, follow these steps:

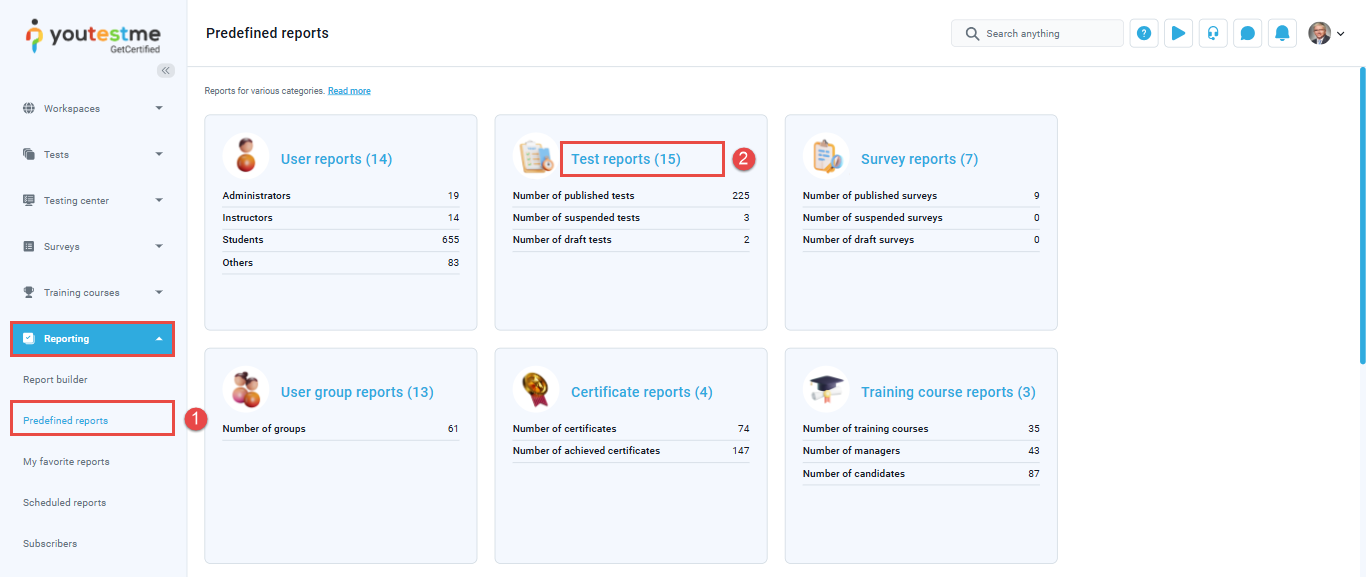

- Click the Reporting option in the main menu, and select Predefined Reports.

- Click on Test Reports.

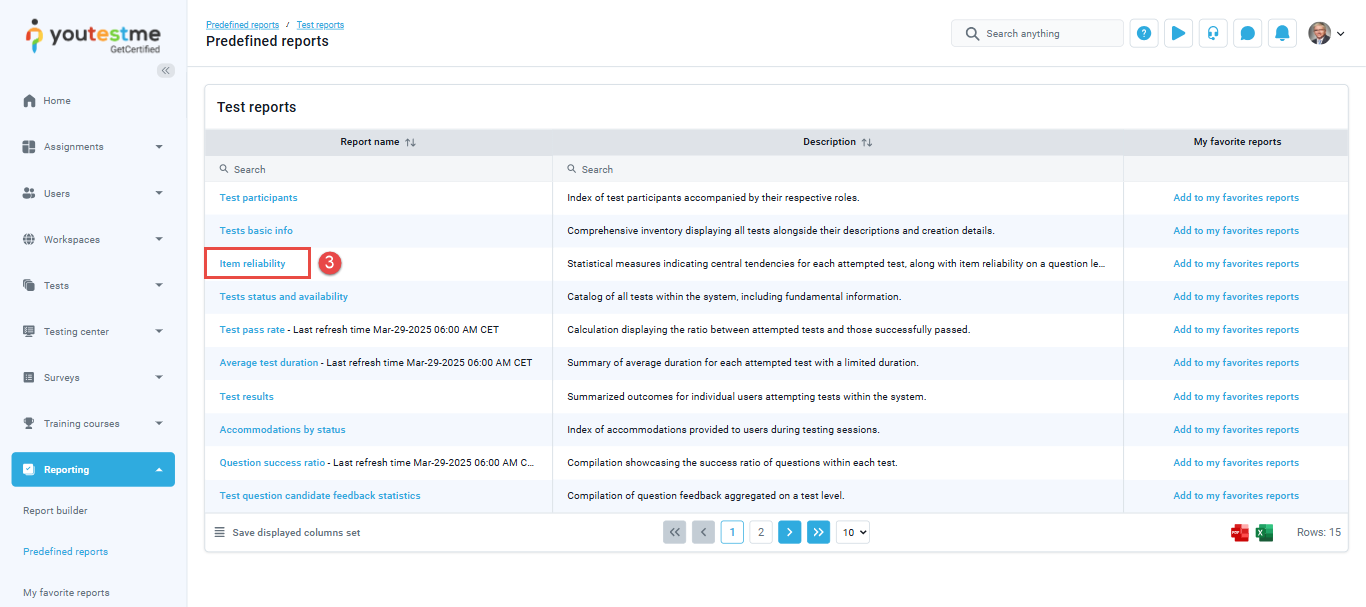

- Choose the Item reliability report.

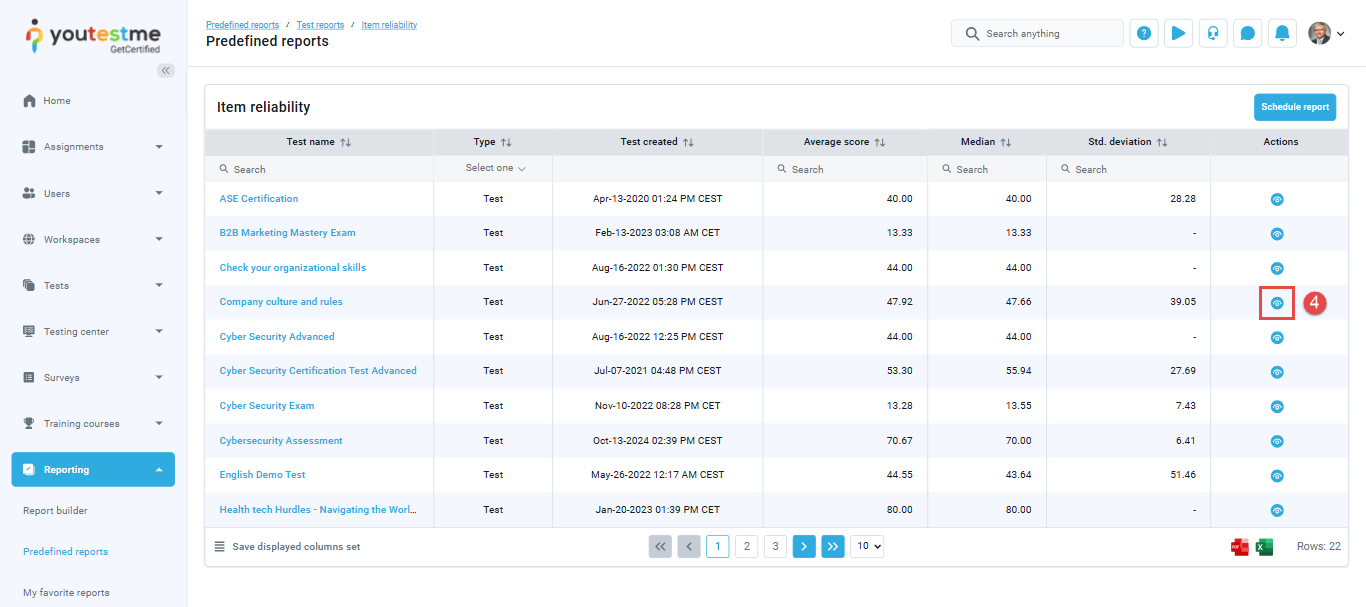

- Click the Details button to preview each question’s success ratio and item reliability.

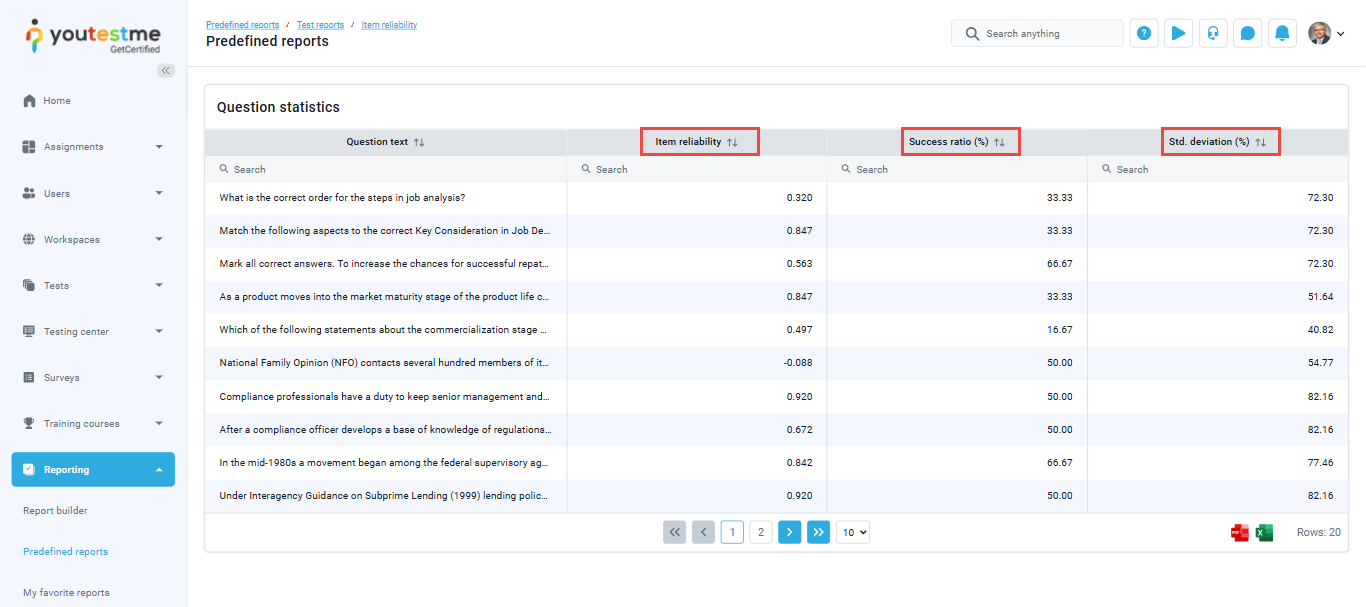

The screen displays the following metrics:

- Item reliability is based on the point-biserial correlation. It shows how each item correlates with the test as a whole, which helps you identify questions that do not belong in the test. To determine this correlation, the system correlates the responses to the item (a dichotomous variable: answered correctly or incorrectly) with the test outcome (a continuous variable: the score on the test). Values range from -1 to 1.

  Negative values usually mean that:

  - Candidates who got lower scores on the exam answered this question correctly.

  - Candidates who got higher scores on the exam answered this question incorrectly.

  In this case, the question correlates negatively with the exam, and you may want to exclude it from future exam versions or replace it.

  Positive values usually mean that:

  - Candidates who got higher scores on the exam answered this question correctly.

  - Candidates who got lower scores on the exam answered this question incorrectly.

  In this case, the question correlates with the exam and should be included in future exam versions.

- Success ratio is the percentage of candidates who answered the question correctly.

- Standard deviation measures the variability of the success ratio for individual questions. It helps you identify questions with inconsistent performance and relate question difficulty to overall test scores.

Note: The item reliability report shows manually created tests.
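The point-biserial correlation described above can be reproduced outside the platform, for example to double-check a question on exported data. The following is a minimal sketch using the textbook formula. The function name `point_biserial` and the sample data are illustrative assumptions, and the report itself may apply a different variant (for example, excluding the item from the total score).

```python
from math import sqrt

def point_biserial(item_correct, total_scores):
    """Correlate a 0/1 item result with the candidates' total test scores.

    item_correct: 1 if the candidate answered the item correctly, else 0.
    total_scores: the same candidates' test scores, in the same order.
    Returns None when the correlation is undefined.
    """
    n = len(item_correct)
    if n != len(total_scores) or n < 2:
        raise ValueError("need two equally long sequences with at least 2 entries")

    right = [s for c, s in zip(item_correct, total_scores) if c]
    wrong = [s for c, s in zip(item_correct, total_scores) if not c]
    if not right or not wrong:
        return None  # everyone answered the same way

    mean_all = sum(total_scores) / n
    # Population standard deviation of the total scores.
    sd = sqrt(sum((s - mean_all) ** 2 for s in total_scores) / n)
    if sd == 0:
        return None  # all candidates have the same score

    p = len(right) / n  # success ratio as a fraction
    mean_right = sum(right) / len(right)
    mean_wrong = sum(wrong) / len(wrong)
    return (mean_right - mean_wrong) / sd * sqrt(p * (1 - p))

# Hypothetical data: four candidates, one question.
scores = [90, 80, 60, 50]
good_item = point_biserial([1, 1, 0, 0], scores)  # high scorers answered correctly
bad_item = point_biserial([0, 0, 1, 1], scores)   # low scorers answered correctly
print(round(good_item, 3), round(bad_item, 3))
```

In this sample, the first item yields a strongly positive value and the second a strongly negative one, which matches the interpretation given in the list above.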

Using the Report Builder

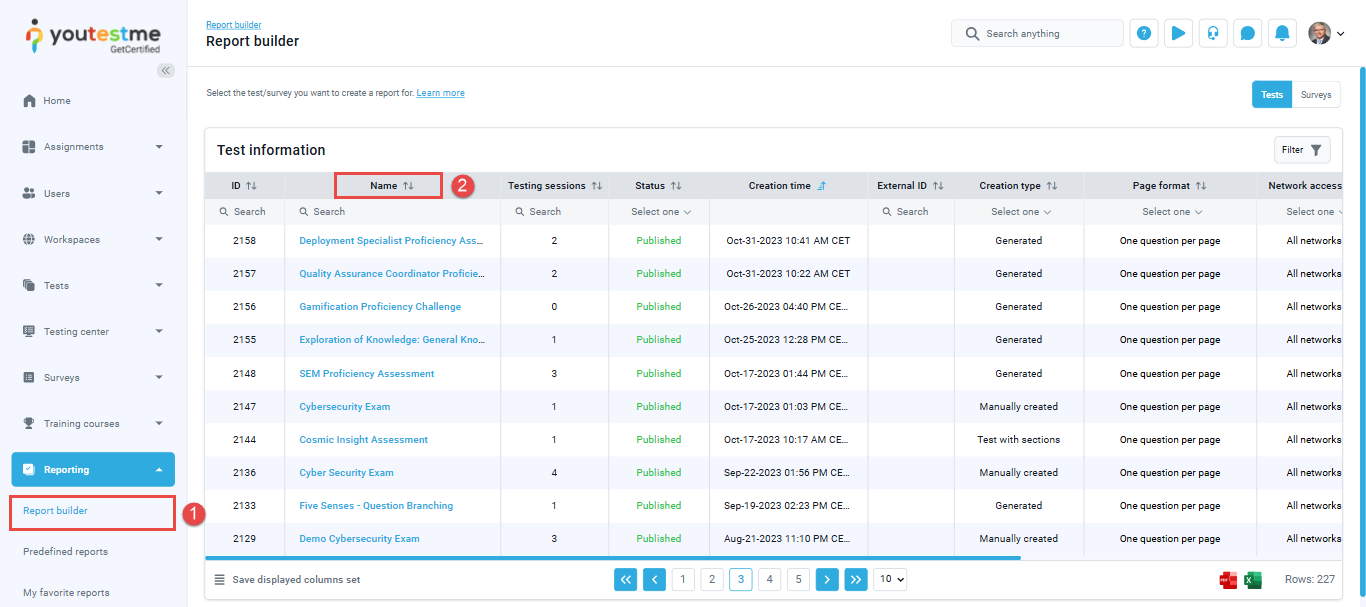

- To access the Report Builder, select the Reporting tab in the left-side main menu, and then select the Report Builder tab.

- Select the test name from the Name column to open the Report Builder for that test.

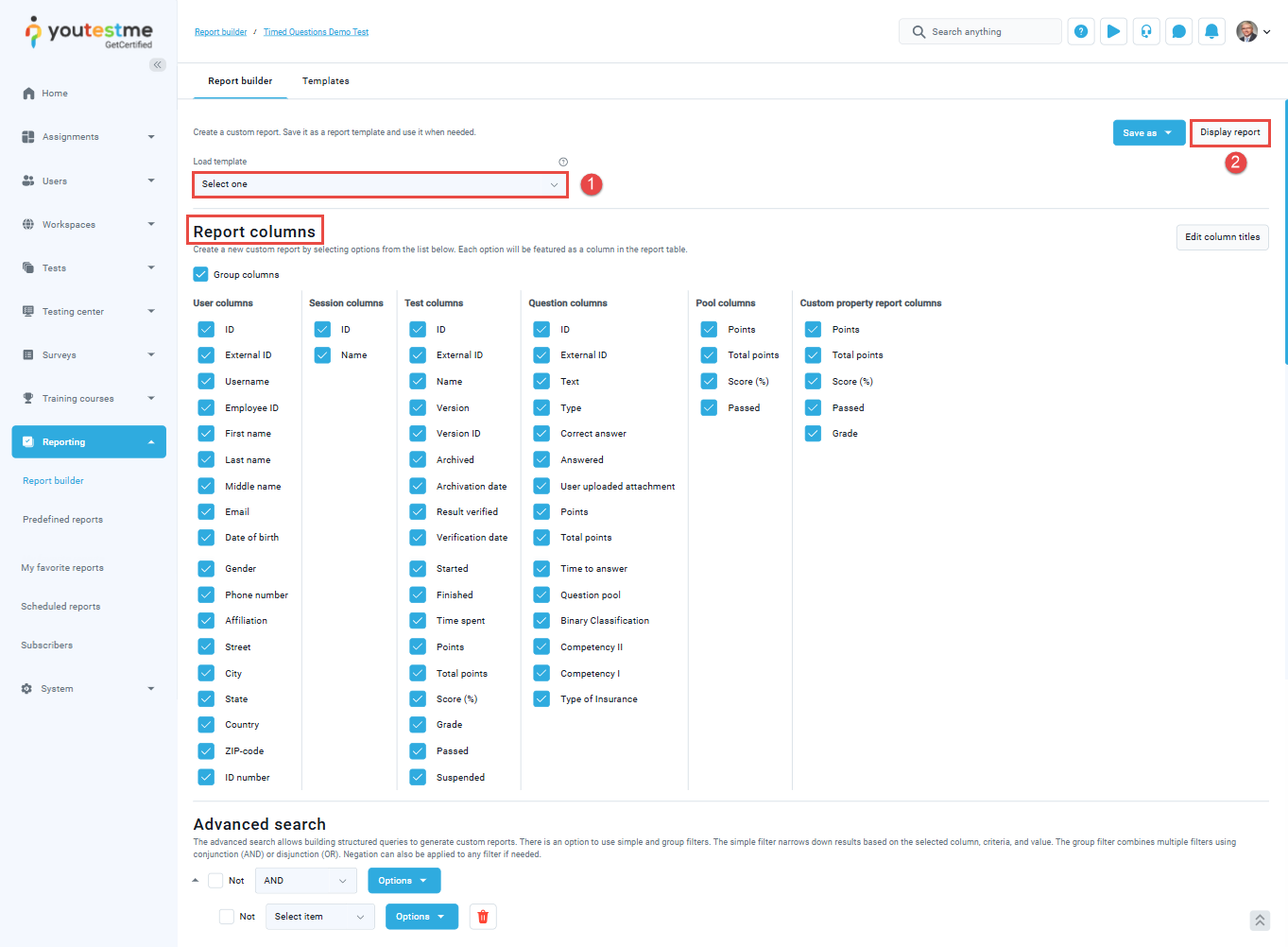

- On the Report Builder page, you can either load existing report templates or create a new custom report by selecting items from the list.

- Once you’ve selected the desired items, click the Display Report button.

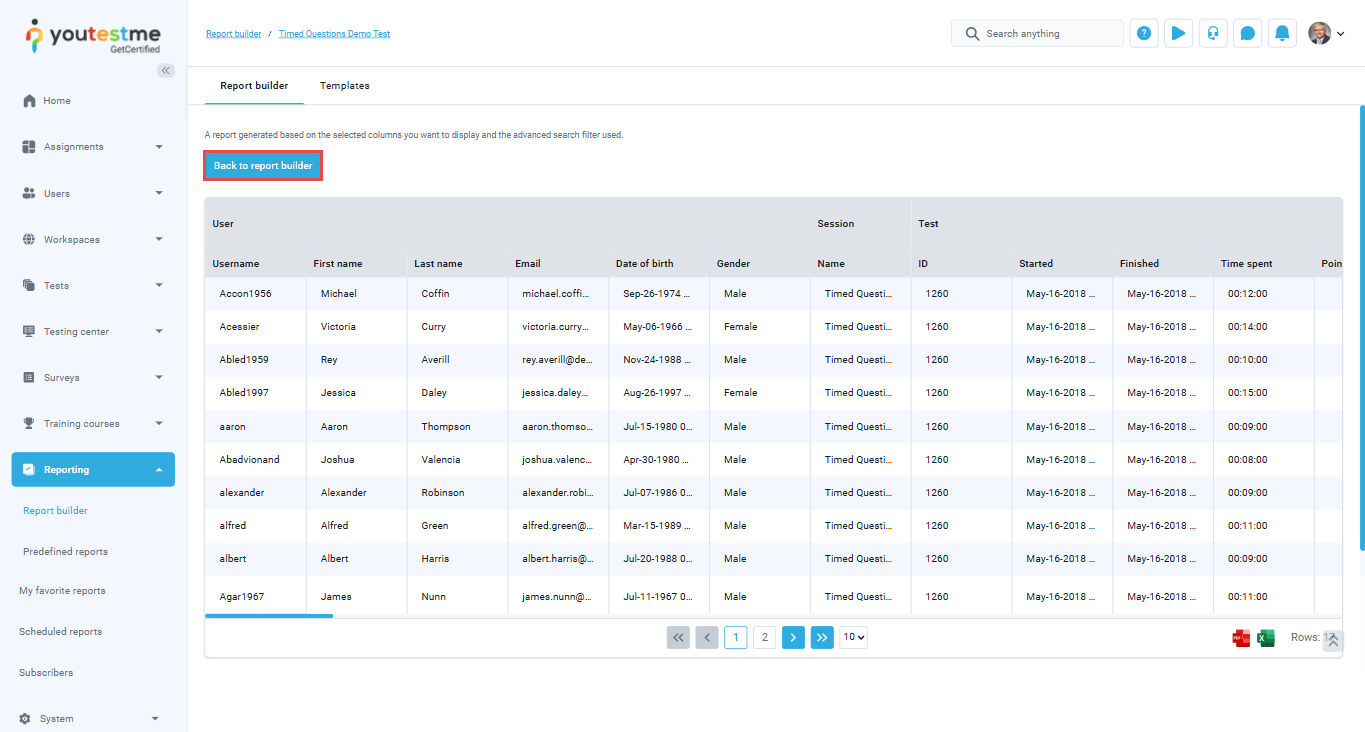

- Each selected item will appear as a column in the report table.

- To return to the Report Builder and change which columns appear, click Back to Report Builder.

For more instructions on how to use the Report Builder, please see this video.

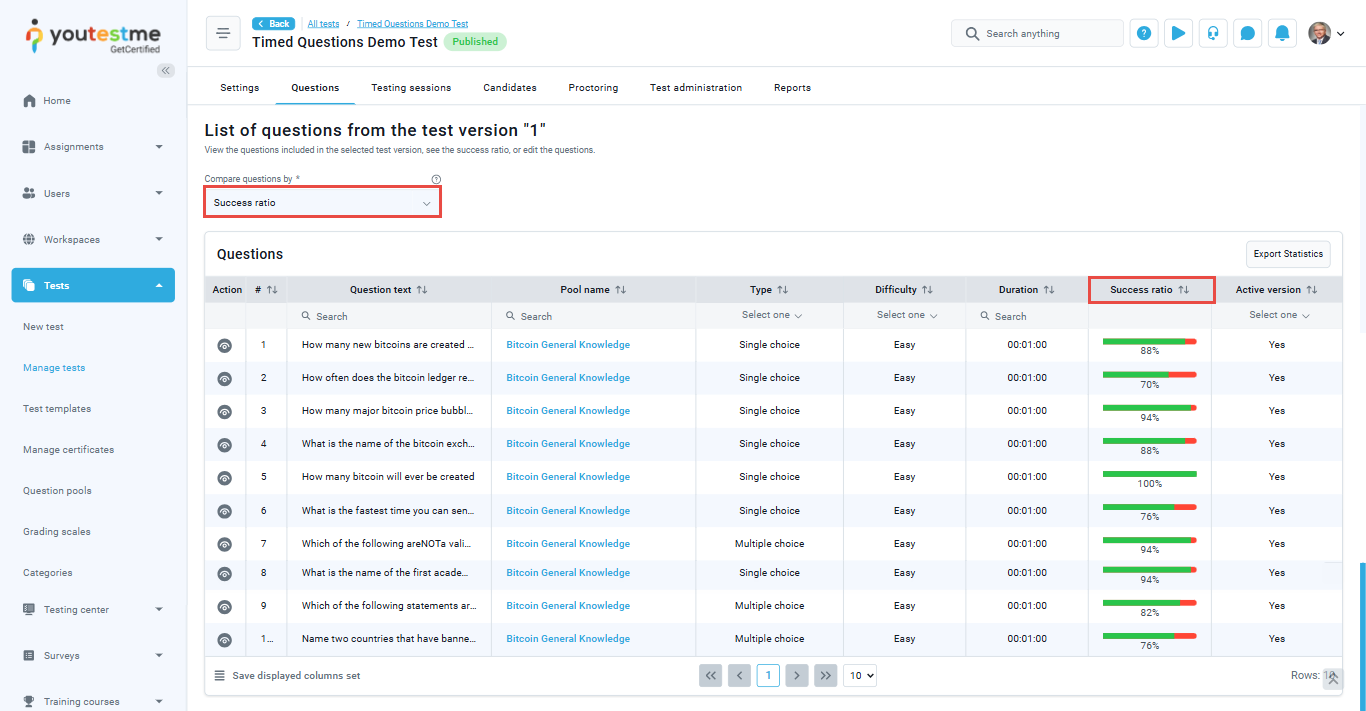
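Once the answers are downloaded, an independent item analysis can start with something as simple as recomputing the success ratio per question. The sketch below assumes a hypothetical CSV layout with one row per candidate per question and the columns `candidate`, `question`, and `correct`. The actual export depends on the items you select in the Report Builder, so adjust the column names to match your file.

```python
import csv
import io
from collections import defaultdict

# Hypothetical export content; a real file would be opened with open(path).
SAMPLE_EXPORT = """candidate,question,correct
alice,Q1,1
alice,Q2,0
bob,Q1,1
bob,Q2,1
carol,Q1,0
carol,Q2,0
"""

def success_ratios(csv_text):
    """Return {question: percentage of correct answers} for the export."""
    attempts = defaultdict(int)
    correct = defaultdict(int)
    for row in csv.DictReader(io.StringIO(csv_text)):
        question = row["question"]
        attempts[question] += 1
        correct[question] += int(row["correct"])
    return {q: 100.0 * correct[q] / attempts[q] for q in attempts}

ratios = success_ratios(SAMPLE_EXPORT)
for question, ratio in sorted(ratios.items()):
    print(f"{question}: {ratio:.1f}%")
```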

Statistics on Question Level

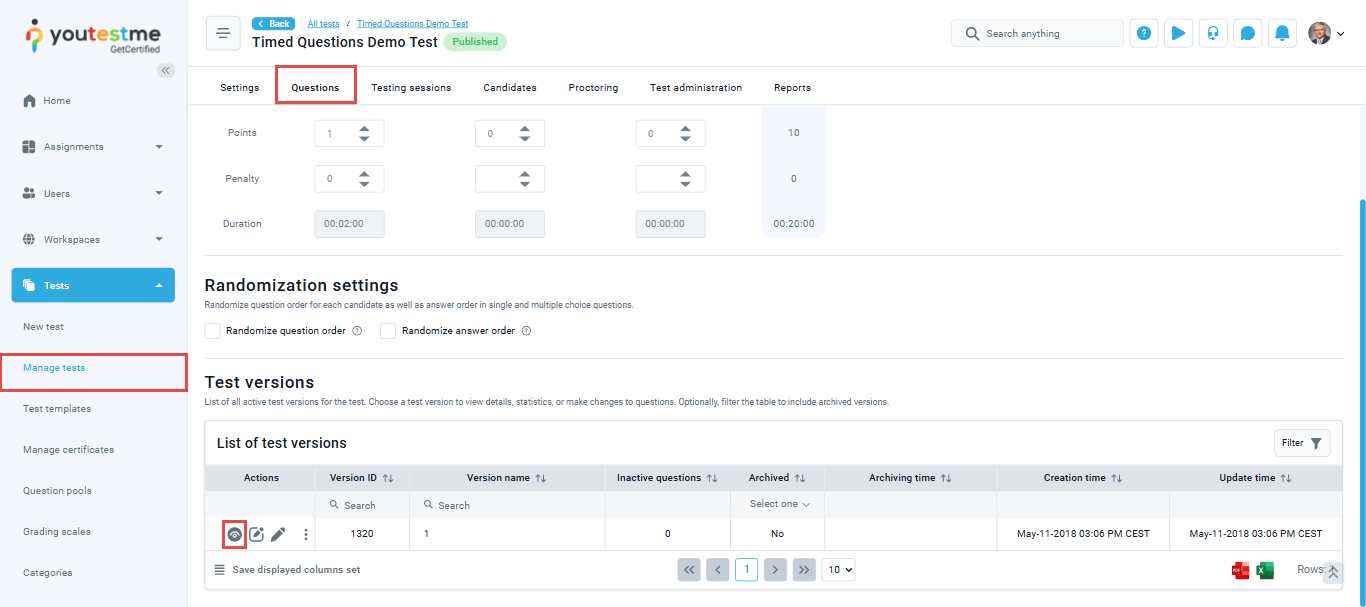

- Navigate to Test and select Manage Test.

- Open the desired test.

- Go to the Questions tab.

- In the list of test versions, click on the eye icon in the Action tab.

- Scroll down to view the statistics for each question in the test.

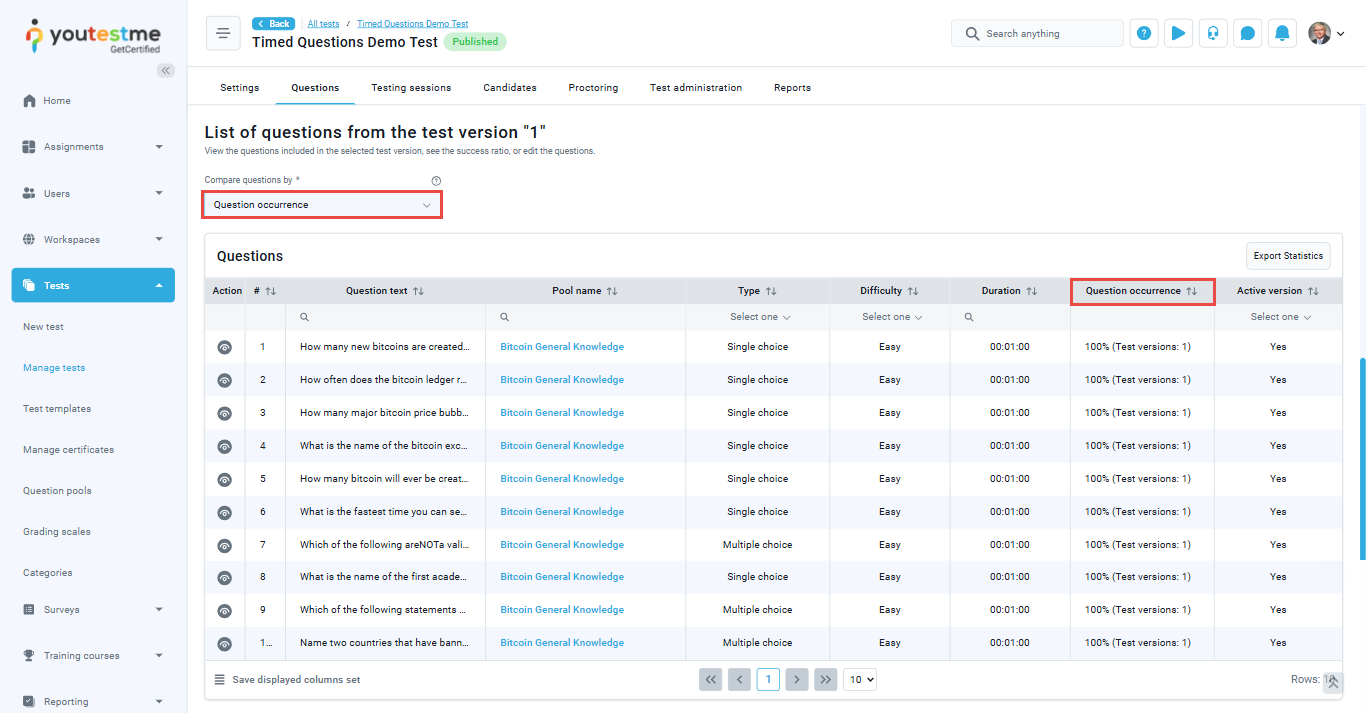

You can compare questions by success ratio, time to answer, and question occurrence.

Success ratio: the percentage of users who answered the question correctly.

Time to answer: the time spent on the question before an answer was provided.

Question occurrence: the percentage of test versions that contain the question.

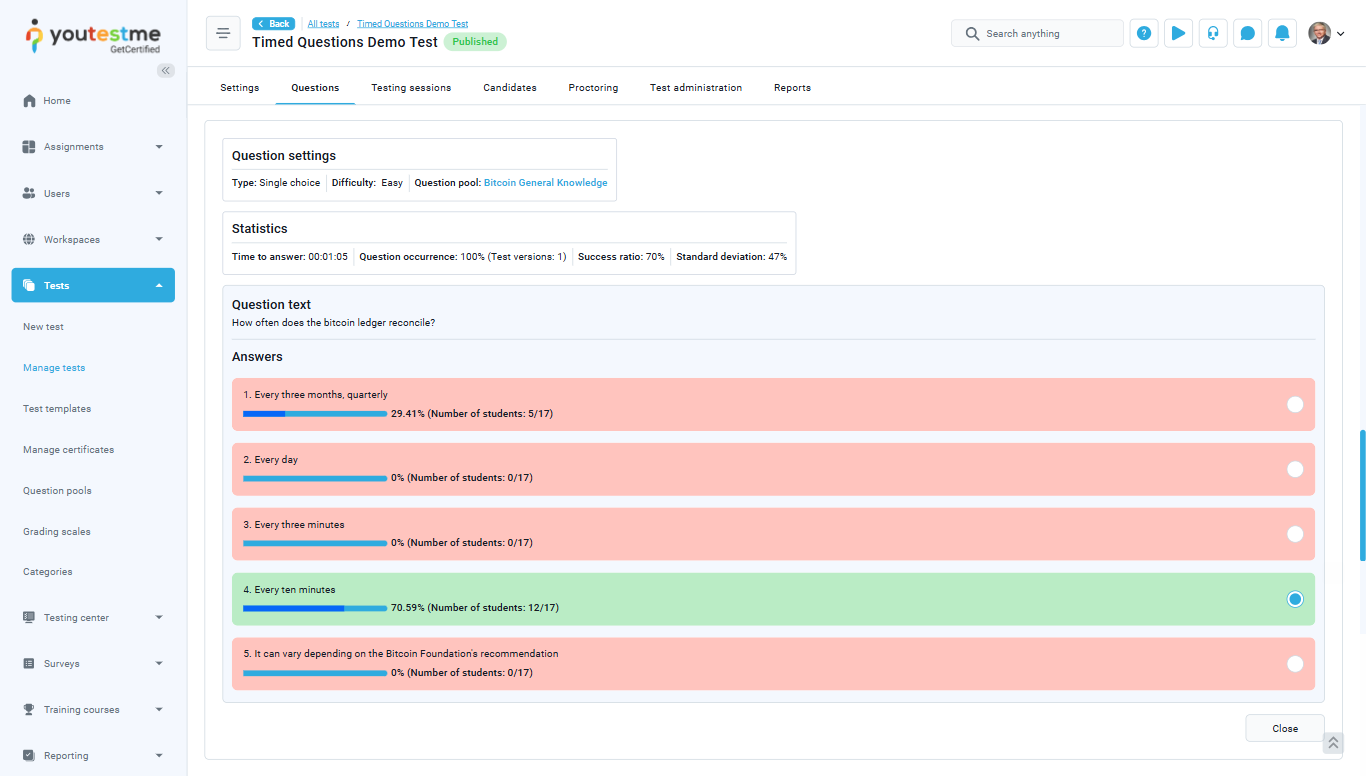
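To make the question occurrence definition concrete, here is a minimal sketch that computes it from a mapping of test versions to the questions they contain. The function name, version names, and question IDs are hypothetical and only illustrate the arithmetic; they are not taken from the platform.

```python
def question_occurrence(versions):
    """Return {question: percentage of test versions containing it}.

    versions: mapping of version name -> set of question IDs.
    """
    if not versions:
        return {}
    total = len(versions)
    all_questions = set().union(*versions.values())
    return {
        q: 100.0 * sum(q in questions for questions in versions.values()) / total
        for q in sorted(all_questions)
    }

# Hypothetical test with two versions.
occurrence = question_occurrence({
    "Version A": {"Q1", "Q2"},
    "Version B": {"Q1", "Q3"},
})
print(occurrence)
```

Here `Q1` appears in both versions, while `Q2` and `Q3` each appear in one of the two.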

Statistics on Answer Level

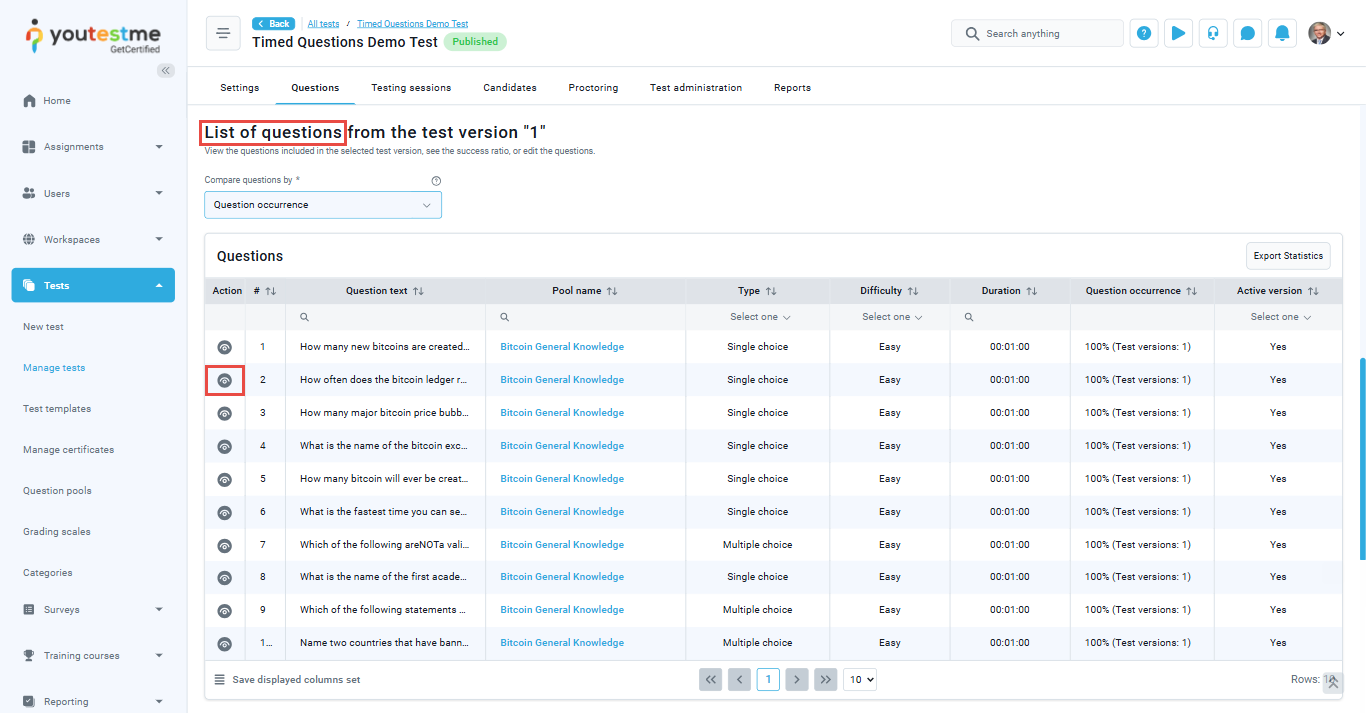

To see statistics at the answer level for each question, follow these steps:

- From the list of questions, choose the desired question.

- Click on the eye icon in the Action tab.

- Scroll down to view the statistics at the answer level.
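Answer-level statistics boil down to how often each answer option was chosen. As a rough illustration of that idea, the sketch below computes the share of candidates who selected each option for a single question. The function name and the option labels are assumptions, and the platform may present additional figures.

```python
from collections import Counter

def answer_distribution(selected_options):
    """Return {option: percentage of candidates who selected it}."""
    total = len(selected_options)
    if total == 0:
        return {}
    counts = Counter(selected_options)
    return {option: 100.0 * n / total for option, n in counts.items()}

# Hypothetical selections from four candidates for one question.
distribution = answer_distribution(["A", "B", "A", "C"])
print(distribution)
```

An incorrect option that attracts a large share of candidates, or one that nobody selects, is often a sign that the answer choices need rewording.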

For more useful instructional materials, please visit:

- How to Use Custom Question Properties in the Report Builder

- How to Create Custom Reports Using Report Builder

- How to Access and Use Predefined Reports